Published: 2019-09-18 17:06:15

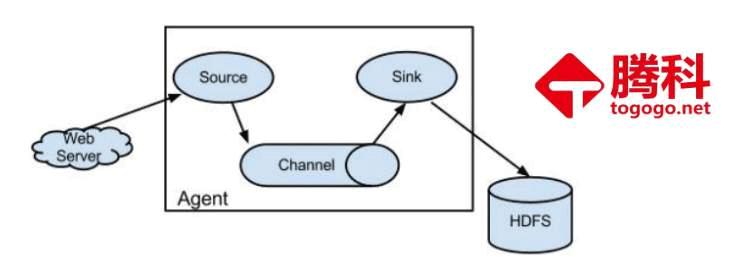

③ Channel: the data transfer channel inside the agent, used to pass data from the source to the sink.
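The following is a toy Python model of a memory channel that makes the channel's role concrete. It is a bounded buffer: the source puts events in all-or-nothing batches, and the sink takes them out. The class `ToyMemoryChannel` is hypothetical and is not Flume's implementation. The two parameters mirror the `capacity` and `transactionCapacity` settings in this article's configuration files. The error types are simplifying assumptions.

```python
from collections import deque


class ToyMemoryChannel:
    """Toy model of a Flume memory channel (illustration only, not Flume's code).

    capacity             -> max number of events buffered in the channel
    transaction_capacity -> max number of events in a single put or take batch
    """

    def __init__(self, capacity: int = 1000, transaction_capacity: int = 100):
        if transaction_capacity > capacity:
            raise ValueError("transaction_capacity must not exceed capacity")
        self.capacity = capacity
        self.transaction_capacity = transaction_capacity
        self.queue = deque()

    def put_batch(self, events: list) -> None:
        """Source side: add a batch all-or-nothing, like a committed transaction."""
        if len(events) > self.transaction_capacity:
            raise ValueError("batch larger than transaction_capacity")
        if len(self.queue) + len(events) > self.capacity:
            # Nothing is added; the source would have to retry later.
            raise OverflowError("channel full")
        self.queue.extend(events)

    def take_batch(self, max_events: int) -> list:
        """Sink side: remove up to max_events, capped by transaction_capacity."""
        n = min(max_events, self.transaction_capacity, len(self.queue))
        return [self.queue.popleft() for _ in range(n)]
```

With `capacity = 1000` and `transactionCapacity = 100`, as in the configs, the toy channel buffers up to 1000 events and hands the sink at most 100 per batch.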

Receiving telnet network data with Flume

    vi netcat-logger.conf

    # Name the components of this agent
    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1

    # Describe and configure the source component: r1
    a1.sources.r1.type = netcat
    a1.sources.r1.bind = localhost
    a1.sources.r1.port = 44444

    # Describe and configure the sink component: k1
    a1.sinks.k1.type = logger

    # Describe and configure the channel component; events are buffered in memory here
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100

    # Connect the source and the sink through the channel
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1
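The last two lines of the file do the wiring. A source lists its channel(s) under the plural key `channels`, while a sink names exactly one channel under the singular key `channel`. Any name used there must match a channel declared in `a1.channels`. The Python helpers below sketch a pre-flight check of that rule. `parse_properties` and `check_wiring` are hypothetical and are not part of Flume. The sketch assumes the simple one-`key = value`-per-line layout used in this file, and Flume's own configuration loader handles more cases.

```python
def parse_properties(text: str) -> dict[str, str]:
    """Parse simple `key = value` lines, skipping blank lines and # comments.

    Simplified assumption: no escapes or line continuations.
    """
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_wiring(props: dict[str, str], agent: str) -> list[str]:
    """Return wiring problems for `agent`; an empty list means the wiring looks OK."""
    problems: list[str] = []
    channels = set(props.get(f"{agent}.channels", "").split())
    for source in props.get(f"{agent}.sources", "").split():
        # A source may write to several channels (space-separated list).
        targets = props.get(f"{agent}.sources.{source}.channels", "").split()
        if not targets:
            problems.append(f"source {source} has no channels")
        for ch in targets:
            if ch not in channels:
                problems.append(f"source {source} -> unknown channel {ch}")
    for sink in props.get(f"{agent}.sinks", "").split():
        # A sink reads from exactly one channel.
        ch = props.get(f"{agent}.sinks.{sink}.channel")
        if ch is None:
            problems.append(f"sink {sink} has no channel")
        elif ch not in channels:
            problems.append(f"sink {sink} -> unknown channel {ch}")
    return problems
```

For example, `check_wiring(parse_properties(open("netcat-logger.conf").read()), "a1")` should return an empty list for the file above.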

Start Flume

[hd@Master flume]# flume-ng agent -c /home/hd/apps/flume/conf/ -f example/netcat-logger.conf -n a1 -Dflume.root.logger=INFO,console
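The source listens on `localhost:44444`, and the heading has it fed with telnet from a second terminal. The minimal Python client below is a scripted alternative. `send_lines` is a hypothetical helper. It assumes the netcat source's default behavior of acknowledging every newline-terminated event with an `OK` line. Each line sent should then appear in the agent's console through the logger sink.

```python
import socket


def send_lines(lines, host="localhost", port=44444, timeout=5.0):
    """Send each line as one event and collect one reply line per event.

    Assumes the server answers every newline-terminated line with a single
    reply line (Flume's netcat source replies "OK" by default).
    """
    replies = []
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with sock.makefile("r", encoding="utf-8") as reader:
            for line in lines:
                # The netcat source turns each newline-terminated line into an event.
                sock.sendall((line + "\n").encode("utf-8"))
                replies.append(reader.readline().rstrip("\r\n"))
    return replies
```

Against a running agent, `send_lines(["hello flume"])` should return `["OK"]`.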

Collecting log files into HDFS with Flume

    # Name the components on this agent
    a1.sources = r1
    a1.sinks = k1
    a1.channels = c1

    # Describe/configure the source
    # Watch a directory: spoolDir is the directory to monitor; fileHeader controls
    # whether each event gets a header holding the absolute path of its source file
    a1.sources.r1.type = spooldir
    a1.sources.r1.spoolDir = /home/hadoop/flumespool
    a1.sources.r1.fileHeader = true

    # Describe the sink
    a1.sinks.k1.type = logger

    # Use a channel which buffers events in memory
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100

    # Bind the source and sink to the channel
    a1.sources.r1.channels = c1
    a1.sinks.k1.channel = c1
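The spooldir source expects every file in `spoolDir` to be complete when it appears. It stops with an error if a file is modified after being placed there or if a file name is reused. A common way to respect this is to write the file elsewhere on the same filesystem and then rename it into the directory in one step. The Python sketch below takes this approach. The helper name `drop_into_spool`, the `.flume-staging` directory next to the spool directory, and the uuid-based file names are all assumptions for illustration. The rename is only atomic if the staging directory and the spool directory are on the same filesystem.

```python
import os
import uuid


def drop_into_spool(spool_dir: str, data: str, prefix: str = "app") -> str:
    """Hypothetical helper: publish `data` as a new, complete file in spool_dir.

    Writes to a staging directory beside spool_dir (assumed to be on the same
    filesystem), then moves the file in with os.replace so the spooldir source
    never sees a half-written file. A uuid keeps every file name unique.
    """
    parent = os.path.dirname(os.path.abspath(spool_dir))
    staging = os.path.join(parent, ".flume-staging")  # assumed staging location
    os.makedirs(staging, exist_ok=True)

    name = f"{prefix}-{uuid.uuid4().hex}.log"
    tmp_path = os.path.join(staging, name)
    with open(tmp_path, "w", encoding="utf-8") as f:
        f.write(data)
        f.flush()
        os.fsync(f.fileno())  # make sure the bytes are on disk before publishing

    final_path = os.path.join(spool_dir, name)
    os.replace(tmp_path, final_path)  # single rename on the same filesystem
    return final_path
```

For example, `drop_into_spool("/home/hadoop/flumespool", "line 1\nline 2\n")` would hand the agent above one finished two-line file.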

Start Flume

[hd@master flume]# flume-ng agent -c /home/hd/apps/flume/conf/ -f example/spool-logger.conf -n a1 -Dflume.root.logger=INFO,console
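Once the agent is running, the spooldir source renames each fully ingested file by appending `.COMPLETED`. That suffix is its default `fileSuffix`, and the default `deletePolicy` of `never` keeps processed files in place. You can check progress by splitting the directory listing on that suffix. `spool_status` below is a hypothetical Python helper, not a Flume tool. It assumes those two defaults are unchanged.

```python
import os


def spool_status(spool_dir: str, suffix: str = ".COMPLETED"):
    """Split regular files in spool_dir into (pending, completed) name lists.

    Assumes the spooldir defaults fileSuffix=".COMPLETED" and
    deletePolicy="never", so ingested files remain with the suffix appended.
    """
    pending, completed = [], []
    for name in sorted(os.listdir(spool_dir)):
        if not os.path.isfile(os.path.join(spool_dir, name)):
            continue  # skip subdirectories
        (completed if name.endswith(suffix) else pending).append(name)
    return pending, completed
```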
